Tim Berners-Lee issued an epistle recently, a call to action to save the web from some dangers which concern him.

One of them is “misinformation” (or “fake news”, as it is rather more commonly and hysterically known). It’s a problem, he says. Everyone says it, and they’re right. Tim doesn’t identify the solution but he does have an interesting comment about the cause.

In fact the roots of the misinformation problem go right back to the birth of the web and the panglossian optimism that a new environment with new rules could lead to only good outcomes. The rights of creators, their ability to assert them and the failure of media business models on the web are at the heart of the problem – and point the way to solving it.

The problem

“Today, most people find news and information on the web through just a handful of social media sites and search engines,” says Tim. Interestingly, he doesn’t mention news products or sites as a source of news.

He is definitely right about the immediate cause of the problem. But why is it that social media and search are the leading sources of news? Why is it that fake news is more likely to thrive there? Could it be something to do with the foundations of the web that Tim himself helped create?

Tim is not a fan of copyright. “Copyright law is terrible”, he said in an interview three years ago.

He is not alone in the view that copyright is incompatible with the web. In fact, the web has largely ignored copyright as it has developed, as if it’s just an error to be worked around.

However innocuous and idealistic this might have seemed at the start, it has evolved into a crisis for the creative sector, which finds it ever harder to generate profits from its online activities.

But it has been a boon for the social media sites and search engines Tim talks about. They depend completely on the creative output of others. If you deleted all the content created by others from Google search and Facebook, what would be left? Literally nothing. It’s important for those businesses that content stays available and stays free.

So we find ourselves in an era when so-called “traditional” news media continues to struggle and the panic about “fake news” is growing ever greater. This is not a coincidence.

Fake or true is about trust

News is, at least in part, a matter of trust. You see a piece of information somewhere. Should you trust it? Is it true? What is this news and who is giving it to me?

The answer is usually a matter of context. If you saw something in, for example, a newspaper you know and trust, you’re more likely to trust it. Stripped of meaningful context, or presented in a misleading context, it’s much harder to know whether something posing as news should be believed.

The social media sites and search engines which now bring us our news show us things which they call news but which they have harvested elsewhere. They didn’t create it, they can’t vouch for it, they don’t and can’t stand behind it.

But they create their own context, using algorithms which, like all algorithms, are open to being gamed and abused.

These platforms are also widely trusted by their users. They create a false trust in information: simply because a platform fed it to them, its users are predisposed to believe it.

Their ability to analyse our personal data and put a personal selection in front of every user makes it worse. No two users of Facebook ever see quite the same thing. Each has their own editor which reflects and confirms that person’s prejudice. Is this really the best way for people to find out about the world?

Who wins?

The reason it works this way is, of course, financial. The currency being traded is clicks – the desire for a user to interact with a piece of content or an ad. Pieces of content exist on their own, outside the product from which they were removed by the platforms and re-purposed as free and plentiful raw material for their click-creating, algorithm-driven machine.

Money is made from all this, but very few of the players get to make it. By far the lion’s share goes to the social networks and search engines, specifically Google and Facebook. They control the personal data which underlies the whole activity, and they operate at such gigantic scale that even tiny amounts of money resulting from a user doing something are magnified by the sheer volume of activities.

That’s why they rely on machines to do the editing. Anything else would be catastrophically inefficient.

In response to the fake news hysteria they are belatedly trying to distinguish between fake and true news, but of course they’re doing it using algorithms and buzzwords, not people.

Employees are expensive and Silicon Valley fortunes depend on using them as little as possible. They’re not “scalable”.

Who loses?

So it comes as no surprise that the person who usually does worst in this whole new media landscape is the person who actually created the content in the first place. They couldn’t help investing time and money in doing so.

Yet, however popular their work turns out to be, they struggle to make money from it because the money-making machinery of the internet is all built around automation. The work of creators can be automatically exploited, ultra-efficiently, without payment and without restraint by others. No wonder they do it.

But it’s not hard to see that it’s a perverse situation which concentrates revenue in the wrong place. Not only is that obviously unfair, it also gives rise to deeper problems, including fake news.

So the rest of us, the so-called end users, are collateral damage. We’re the ones caught in the middle, on the one hand being used as a source of advertising revenue for the giant platforms, on the other being fed this unreliable stream of stuff labelled, sometimes falsely, as “news”.

It’s important that creators can make money from their work

The inability to make money from content, particularly news content, gives rise to some very undesirable outcomes.

The rationale for investing in creating news content is undermined. It’s expensive and inefficient, and increasingly hard to make profitable in an internet which is optimised for efficiency and scalability. So news organisations cut costs, reduce staff, rely more on third parties. Less original news is created professionally.

Third parties sometimes step into the void to generate news and provide information. But they aren’t always ideal either. Often they are partisan, offering a particular point of view, and their principal loyalty is not to the readers but to the agenda of their clients. PR people and spin doctors, for example, have always been there trying to influence journalists and can now, often, bypass them.

Others are more insidious. They might present themselves as experts, impartial or legitimate news organisations but in fact have another agenda altogether. Ironically, some of them might find it easier to sustain themselves because their primary goal is influence, not profit – their funders measure the rewards in other ways.

Some news organisations, for example, are state funded and follow an agenda sanctioned by their political paymasters. Others hide both their agenda and their funding and present themselves alongside countless others online as useful sources of information.

We can see where fake news comes from.

Products matter more than “content”

It’s made worse by the habit of the big platforms to disassemble media products into their component pieces of content, and present them individually to their audiences.

A newspaper, made up of a few hundred articles assembled from hundreds of thousands made available to the editors, is disassembled as soon as it’s published and turned into a data stream by the search and social algorithms.

The data stream, with every source, real and fake, jumbled up together is then turned back into a curated selection for individual users. This is done not by editors but by algorithms which present reliable and unreliable sources side-by-side and without the context of a surrounding product.

The cost of “free”

The consumer, as Tim Berners-Lee points out and frets about, is the victim of this. They don’t know when they’re being lied to, they don’t know who to trust. They might, understandably, invest too much trust in the platforms which are, in fact, presenting them with a very distorted perspective.

Their data and other people’s content are turned into huge profits for the platforms, but at the cost of undermining the interests of each individual user and, therefore, society as a whole.

Think about the money

When considering how this problem might be solved we have to think about the money.

For news organisations to be able to invest in employing people and creating news, two interlinked factors are essential.

The first is that they need to be able to make enough money to actually do all that. They need to make more than they spend. Profit is not a distasteful or optional thing, it’s an absolute necessity.

The more, the better because it encourages competition and investment.

The second is that the profit needs to be driven by the users. The more people see of your product, the more opportunity to make money needs to arise – and therefore the more you need to invest in delighting users and being popular by having a great product.

Running to stand still

This isn’t necessarily what happens when revenue is generated from advertising. Yields and rates tend to get squeezed over time, so even maintaining a certain level of revenue requires growth in volume every year. For many digital products, this means more content, more cheaply produced, more ads on every page. And, often, higher losses anyway.

When money is algorithmically generated from the advertising market, nearly all of it passes through the hands of a couple of major platforms. Their profits aren’t proportional to their own investment in the content they exploit, but to that of others. Good business, of course, and fantastically profitable.

Their dominance of the market, enabled by the internet, is unconstrained by regulators or effective competition. http://precursorblog.com/?q=content/look-what’s-happened-ftc-stopped-google-antitrust-enforcement This causes the profits to accumulate in great cash oceans in Silicon Valley, inaccessible and useless to the creators and media businesses whose search for a viable business model goes on.

The only other way

The only way for media products to make money, other than from advertisers in one form or another, is from their users directly.

Where revenue is earned by delighting consumers, their trust has to be earned and preserved. When those users are paying for your product, and choose whether to pay or not, pleasing them becomes more important than anything else.

Then the playing field gets tilted against fake news content and products. Where investment is rewarded by profit, journalism not only can afford to shine a spotlight on the lies and dishonesty of others, it has to.

Tim Berners-Lee is wrong to hate copyright

This is why Tim Berners-Lee and others are wrong about copyright in the digital age. It might have seemed wrong to them when seen against the backdrop of an idealistic, utopian vision of the digital future.

But seen in the rather uglier light of today’s online reality, its virtues are rather more apparent.

Copyright is a human right

Copyright gives creators some control over the destiny of their work. It applies to everyone who creates anything – that means you and me as well as so-called “professionals”.

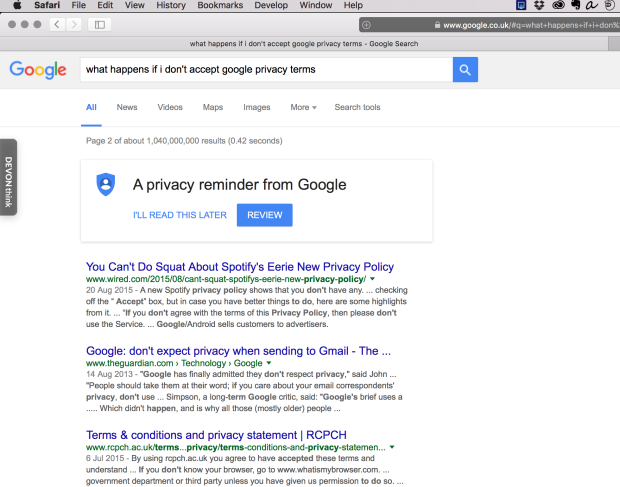

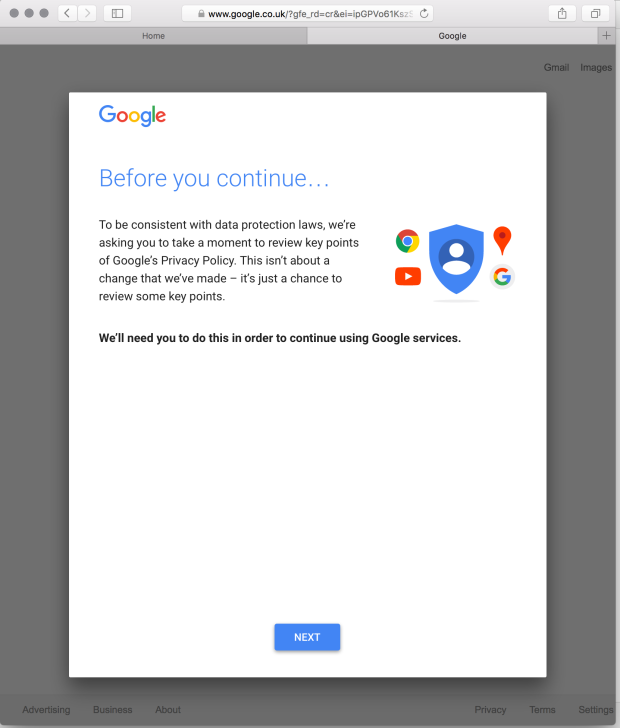

Tim argues obsessively that everyone should have the right of control over data that is generated about them – privacy is his great hobby horse.

But he has argued the opposite about the near-identical rights that copyright already gives people over the creative works they create themselves.

The web isn’t the utopia everyone hoped for

The time has come for Tim Berners-Lee and others to acknowledge the mistake they have made about copyright. Arguing that it should be weak or non-existent doesn’t just help concentrate power and money in the hands of a tiny cadre of internet oligarchs, destroying opportunity for others at the same time.

It also destroys the economic basis for a plural, free and fearless press. It makes the space for misinformation and fake news. It betrays its users with the false promise of something for nothing. The price we really pay for the “free” web is becoming more and more obvious.

We are seeing right now how dangerous that false promise is.

It might not be fashionable but we can learn the lessons of history here. Copyright works. The idealism of the early internet has encountered a number of reality checks but the strange antipathy towards copyright has persisted and every attempt to change it has been rebuffed.

When wondering why this might be, don’t forget to consider those oceans of cash swilling around on the west coast of America and ask the question “who benefits from this?”

It certainly isn’t the rest of us.